There’s this old bridge that I would visit a lot as a kid. Nothing particularly special about it — just a mid tourist attraction for people afraid of heights.

Over the years, when I would visit my family for the holidays, I would go back to this bridge for a smol hike.

Different eras of me revisiting this old bridge. The bridge always remained the same, but I changed.

This bridge is my yardstick so to speak — a point of measurement and reflection.

Now, I’m revisiting the proverbial bridge that is the cross-chain problem. So much has changed since I originally wrote my piece in December. Not only has the space changed, but so have I.

I moved halfway across the globe. I founded a company. I even found time to get two new tattoos.

As a result, my thinking on interoperability has changed as well. New ideas, new mental models, and new hypotheses on what the end game for interoperability will be.

My answer: More so than ever, we need to prioritise the safe and scalable design of bridges because bridges touch everything.

Yes, bridges have always been important in a “multi-chain world”, but I think that, in a modular future, they have come to encompass all facets of crypto.

As a result, interoperability gets levelled up from being Important to We Absolutely Need to Solve This Or We’re Screwed.

The trillion-dollar question still remains for bridges: How do we safely move information from one chain to another?

We’ll unpack this together: where are we moving information from (i.e., wtf is even a chain these days), how do we verify correct information, and what this actually looks like in practice.

The Where: Bridging in Modular

Everything is modular now, and it makes everything way more complicated.

Last time I wrote about bridges we only discussed them in the context of monolithic blockchains.

Things are simple in a monolithic world. Monolithic blockchains have a homogeneous state, so the verification of a state transition can be done by running a full node of the chain or verifying a single consensus proof.

However, with modular blockchains, there are three (or four) separate components that need to be verified in order to sufficiently convince a third party of a state transition.

As a result, bridges would need various light clients to verify the respective layers of the modular blockchain stack:

- Execution: Includes the virtual machine, which runs the code of smart contracts and executes the state transition functions.

- Transaction Ordering (TO): Responsible for the canonical ordering of transactions/state transitions from the execution layer.

- Data Availability (DA): Responsible for storing and distributing the data associated with each transaction. This layer ensures that the data is available to all nodes on the network.

- Settlement: Responsible for verifying transactions and ensuring “finality” (we’ll revisit this later in the post).

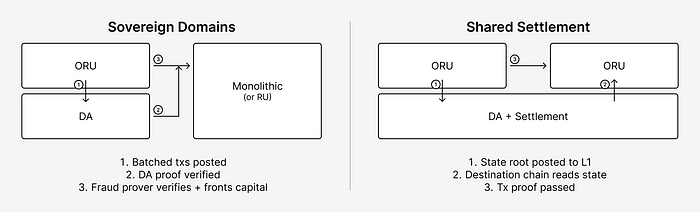

This additional complexity opens up the design space for verifying a transaction across domains.

If two domains share the same settlement layer (e.g., smart contract rollups on top of Ethereum), the destination domain can just read the state root of L1 in order to verify the state transition.

If two domains share the same DA layer (e.g., Celestia, EigenDA, Avail or an enshrined layer), the destination domain will need to verify that the data is available before verifying that the state transition is valid.

The same can be done with domains that share the same TO layer (e.g., a shared sequencer like Astria or Espresso). A proof of ordering needs to be checked before verifying the state transition.

In a modular environment, various layers must be verified before the execution layer’s verification can begin. In order to do so programmatically, we create a conditional light client.
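To make the idea concrete, here is a minimal sketch of a conditional light client in Python. It is not any project's actual implementation: the `ConditionalLightClient` class, the layer names, and the `Check` callables are all hypothetical stand-ins for real proof verifiers. The only thing it shows is the ordering rule: the execution proof is not even looked at until every prerequisite layer has verified.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical: a check takes an opaque proof blob and returns True if it verifies.
Check = Callable[[bytes], bool]


@dataclass
class ConditionalLightClient:
    # Checks that must pass before execution is considered (e.g. "da", "to").
    prerequisites: Dict[str, Check]
    # Check for the execution layer's state transition itself.
    execution: Check

    def verify(self, proofs: Dict[str, bytes]) -> Optional[str]:
        """Return None on success, else the name of the first failing layer."""
        for layer, check in self.prerequisites.items():
            proof = proofs.get(layer)
            if proof is None or not check(proof):
                return layer
        exec_proof = proofs.get("execution")
        if exec_proof is None or not self.execution(exec_proof):
            return "execution"
        return None


# Toy usage: every "verifier" simply accepts the bytes b"ok".
accepts_ok: Check = lambda proof: proof == b"ok"
client = ConditionalLightClient(
    prerequisites={"da": accepts_ok, "to": accepts_ok},
    execution=accepts_ok,
)
print(client.verify({"da": b"ok", "to": b"ok", "execution": b"ok"}))  # None
print(client.verify({"da": b"ok", "execution": b"ok"}))               # to
```

Which layers appear in `prerequisites` depends on what the two domains share, so the same shape covers a shared DA layer, a shared sequencer, or both.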

Cross-chain is difficult to say the least, and as the crypto ecosystem becomes more modularised, it’s become increasingly difficult for a bridge to ensure the security and reliability of cross-chain transactions.

The How: Achieving “Finality”

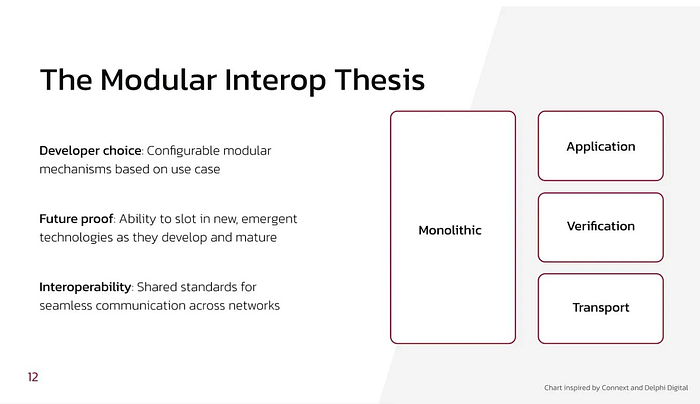

As with modularising blockchains, modularising bridges adds more moving pieces to the equation.

I’ve written about how bridges can overcome the interoperability trilemma through modularisation — splitting up the job of bridging into three components: application, verification, and transport.

Modular interoperability protocols can have shared application and transport layers in order to inherit the existing application-level specifications (e.g., ICS standards like ICS-20 (fungible tokens), interchain accounts, and interchain queries) and routing specifications.

Moreover, modular interop protocols can configure the verification layer — leveraging multiple validation mechanisms and also allowing for programmability on use case, monetary amount of transaction, latency of transaction, etc.

Bridges have utilised five methods in order to verify a transaction:

- Direct external validation: entities run a full node off-chain and vote on the validity through a multisignature scheme (Team Human / Team Economics from my previous post)

- Optimistic external validation (i.e., faux optimistic): relayers pass any arbitrary transactions that are viewed in an “Outbox” contract and watchers flag fraud by running full nodes off-chain (Team Game Theory)

- Consensus proofs: recognition that an honest majority of validators have confirmed and signed a valid transaction (Team Math)

- Fraud proofs (i.e., real optimistic): anyone can submit a cryptographic proof to challenge an invalid state transition that has been submitted by a rollup operator

- Validity proofs: generation of SNARKs that verify that a transaction in a rollup is correctly computed and therefore valid

Four layers of a modular blockchain. Five methods of verifying a transaction.

In a modular world, bridges need to mix-and-match various verification methods with various parts of the blockchain stack in order to pass a valid message.
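As a rough illustration of what "configuring the verification layer" could mean, the sketch below picks verification methods based on a message's value and latency budget. Everything here is an assumption made for illustration: the `Method` enum mirrors the five methods listed above, while `Message`, `choose_methods`, and both thresholds are hypothetical and not taken from any real protocol.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Method(Enum):
    EXTERNAL = "direct external validation"
    OPTIMISTIC_EXTERNAL = "optimistic external validation"
    CONSENSUS = "consensus proof"
    FRAUD = "fraud proof"
    VALIDITY = "validity proof"


@dataclass(frozen=True)
class Message:
    value_usd: float      # monetary amount carried by the message
    max_latency_s: float  # how long the sender is willing to wait


def choose_methods(
    msg: Message,
    high_value_usd: float = 1_000_000,  # hypothetical threshold
    slow_path_s: float = 3_600,         # hypothetical threshold
) -> List[Method]:
    """Pick verification methods for a message (illustrative policy only)."""
    if msg.value_usd >= high_value_usd:
        # High value: require two independent mechanisms to agree.
        return [Method.CONSENSUS, Method.VALIDITY]
    if msg.max_latency_s >= slow_path_s:
        # The sender can wait, so a single trust-minimised proof is enough.
        return [Method.CONSENSUS]
    # Small and urgent: fall back to the fastest (and weakest) option.
    return [Method.EXTERNAL]


print(choose_methods(Message(value_usd=50, max_latency_s=30)))
```

A real policy would also have to account for which layers the two domains share, which is exactly where the combinatorics start to bite.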

Now you can start seeing how complicated this gets.

So what happens after verification? Then a transaction is considered “final”.

Finality is the assurance that a transaction is truly irreversible — whether strictly defined in a blockchain’s consensus mechanism (e.g., Tendermint) or through a statistical threshold of re-organisation likelihood (e.g., Bitcoin PoW).
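For the statistical flavour of finality, one well-known model is the attacker catch-up calculation from section 11 of the Bitcoin whitepaper. The sketch below reproduces that formula in Python. Treat it as one simplified model of re-org likelihood under stated assumptions (a fixed attacker hashrate share `q`), not as how any particular bridge picks its confirmation depth. The function names are my own.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Chance an attacker with hashrate share q rewrites a block buried z deep.

    Follows the Poisson approximation in the Bitcoin whitepaper (section 11).
    """
    if q >= 0.5:
        return 1.0  # a majority attacker always catches up eventually
    p = 1.0 - q
    lam = z * (q / p)  # expected attacker progress while z honest blocks are mined
    total = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam) * lam**k / math.factorial(k)
        total -= poisson * (1.0 - (q / p) ** (z - k))
    return total


def confirmations_needed(q: float, max_risk: float) -> int:
    """Smallest depth z at which the re-org risk drops to max_risk or below."""
    z = 0
    while attacker_success_probability(q, z) > max_risk:
        z += 1
    return z


# With a 10% attacker and a 0.1% risk tolerance, the whitepaper's table gives z = 5.
print(confirmations_needed(q=0.10, max_risk=0.001))  # 5
```

The takeaway for bridges is that "final" under this model is a number you choose, and a bigger assumed attacker pushes the wait up quickly.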

Finality provides certainty and security and is especially important in a cross-chain context because of — as Vitalik wrote in his infamous Reddit post — the inability for rollbacks to be coordinated across zones of sovereignty. As a result, erroneously verified and passed transactions can spell the death of a blockchain.

However, finality gets complicated in a modular context.

What even constitutes a transaction (e.g., the validity proof and the DA proof) and what qualifies as a high probability of finality (i.e., different settlement, DA, and sequencer finality rules)?

If a bridge were to follow the definition of finality to a T, it would drastically degrade user experience.

In the case of optimistic rollups, bridges would need to wait for the 7-day challenge period before passing a “finalised” transaction to the destination domain. This can result in significant delays in accessing cross-chain funds and messages — which is an absolute no-go from a user experience perspective.

We need to balance the notion of finality with a good user experience.

Verification of finality needs to be a spectrum (called “soft” finality or soft confirmations), with different verification mechanisms creating different definitions of how “soft” finality is.

In my previous post about cross-chain bridging, I discussed the importance of abstracting the finality risk away from users. To date, we’ve seen liquidity networks that provide users with access to fast liquidity via pre-confirmed finality: Hop Protocol, Connext Network, Across Protocol, and Synapse AMM.

Entities in these networks (called bonders, routers, or another moniker) use various methods to verify transactions and put up their own capital in exchange for a fee. By doing so, they take on the finality risk themselves and offer users a faster and more seamless cross-chain experience.

The What: Verifying In Practice

Modular stack, modular bridges, soft finality… given everything that we’ve discussed, what does verifying a cross-chain transaction actually look like in practice?

The short, unsatisfactory answer is that it depends ¯\_(ツ)_/¯

It differs widely depending on the configuration of execution, transaction ordering, data availability and settlement layers — as well as whether two domains share the same underlying layers.

Instead of tackling it by each permutation, I’ll summarise it into three buckets based on the form of verification used: consensus, fraud, and validity proofs.

The Tried-and-True: Proof of Consensus

For simplicity’s sake, we in theory could have a consensus proof to verify nearly every modular configuration.

Here’s how it would work: the destination chain would have light clients for every part of the stack, such as execution, TO, DA, and settlement.

The validators for each respective layer would sign to include the transaction into their chain, and the destination chain would verify this.
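At its core, each of those light clients is doing a stake-weighted quorum check. The sketch below shows that check in isolation. It assumes a BFT-style rule of strictly more than two-thirds of voting power (as in Tendermint-style consensus) and assumes the signatures themselves have already been verified, so `signers` is just a set of validator IDs. The function name and parameters are hypothetical.

```python
from fractions import Fraction
from typing import Dict, Set


def has_quorum(
    voting_power: Dict[str, int],
    signers: Set[str],
    threshold: Fraction = Fraction(2, 3),  # assumed BFT-style threshold
) -> bool:
    """True if validators in `signers` hold strictly more than `threshold` of total power.

    Assumes each signer's signature over the block header was verified upstream.
    """
    total = sum(voting_power.values())
    signed = sum(power for validator, power in voting_power.items() if validator in signers)
    # Fractions avoid float rounding right at the 2/3 boundary.
    return total > 0 and Fraction(signed, total) > threshold


validators = {"a": 10, "b": 10, "c": 10, "d": 10}
print(has_quorum(validators, {"a", "b", "c"}))  # True  (30/40 > 2/3)
print(has_quorum(validators, {"a", "b"}))       # False (20/40 <= 2/3)
```

In a modular setup the destination chain would run one such check per layer, each against that layer's own validator set.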

For execution layers that have their own full nodes running direct proofs (like a pessimistic rollup), we could simply use a consensus proof with a proof of data availability (which is also a consensus proof in some implementations).

In cases where the execution layer has a settlement layer (like an optimistic rollup on Ethereum), the settlement layer becomes the source of verification — where a consensus proof including the execution layer’s state root will determine the validity of the state transition.

The downside to using a blanket consensus approach is latency.

For smart contract rollups with a settlement layer, transactions are batched and proofs are posted to L1 on a delayed cadence. This can result in longer wait times for users.

Moreover, some consensus mechanisms are slower to reach consensus than others, such as Ethereum Gasper compared to Tendermint/CometBFT.

And for mechanisms that use a probabilistic finality gadget, there is more uncertainty around what is considered “final” due to the possibility of an N-block-deep re-org.

What if there’s no consensus? Then we move on to the next two buckets 🫡

The Occasionally Helpful: Fraud Provers for Soft Confirmation

Certain execution layers rely on other layers for transaction ordering and data availability, and use fraud proofs to ensure transaction validity. Let’s give them a name: sovereign optimistic rollups.

In this case, there is no settlement layer to canonically determine finality for the execution layer, so we can’t use an L1 consensus proof (there are also no full nodes in this scenario).

This is where “soft finality” comes in handy.

Optimistic verifiers, also known as fraud provers, are responsible for reviewing the transaction and passing it on to the destination domain if no fraudulent activity is detected.

Since they’ve assessed the risk of fraud and a potential rollback upfront, they take on the finality risk and allow users to bypass the 7-day challenge period.

If an execution layer were to ever publish an invalid state transition, fraud provers can submit a fraud proof for dispute and also elect to not front capital for a transaction in order to avoid damages from a rollback.
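Here is a toy version of that decision, assuming the fraud prover can re-execute the batch locally. The state model (a dict of balances), the SHA-256 "state root", and the `transfer` function are all simplifications invented for this sketch. A real rollup would use a Merkle commitment and its own state transition function.

```python
import hashlib
from enum import Enum
from typing import Callable, Dict, List, Tuple

State = Dict[str, int]
Tx = Tuple[str, str, int]  # (sender, receiver, amount)


class Decision(Enum):
    FRONT_CAPITAL = "front capital"
    DISPUTE = "submit fraud proof"


def state_root(state: State) -> str:
    """Toy commitment: hash of the sorted balances (not a real Merkle root)."""
    return hashlib.sha256(repr(sorted(state.items())).encode()).hexdigest()


def transfer(state: State, tx: Tx) -> State:
    """Toy state transition function: move `amount` from sender to receiver."""
    sender, receiver, amount = tx
    if state.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    new_state = dict(state)
    new_state[sender] = new_state.get(sender, 0) - amount
    new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def review(prev: State, txs: List[Tx], claimed_root: str,
           apply_tx: Callable[[State, Tx], State]) -> Decision:
    """Re-execute the batch and compare against the operator's claimed root."""
    state = dict(prev)
    try:
        for tx in txs:
            state = apply_tx(state, tx)
    except ValueError:
        return Decision.DISPUTE  # the batch contains an invalid transaction
    if state_root(state) == claimed_root:
        return Decision.FRONT_CAPITAL
    return Decision.DISPUTE


genesis = {"alice": 10, "bob": 0}
batch = [("alice", "bob", 4)]
print(review(genesis, batch, state_root({"alice": 6, "bob": 4}), transfer))
```

The economic point is in the return value: the same check that produces a fraud proof also tells the prover not to put their own capital behind the transaction.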

This is the effective role of entities in platforms like Hop, Connext, and Across, who validate messages and front capital for users’ cross-chain financial transactions.

Dymension has eIBC — a similar concept on general message passing.

On the destination domain, there is a conditional light client that will only process the fraud prover’s transaction if a DA proof and TO proof are posted and verified as well.

The End Game: Validity Proofs

The bridge verification process is streamlined when execution layers use ZK proofs to verify validity.

ZK proofs are proof of computational correctness — meaning that a transaction’s state transition function was executed and determined to be correct.

Bridges can then simply pass the validity proof to the destination domain, which still needs conditional light clients for DA and TO.

It was previously thought that bridges would need to use a consensus proof on the settlement layer for ZK rollups — with the same issues of finality that we’ve seen before.

“Soft confirmation” was a potential solution, where bridges don’t wait for the validity proof and transactions/state diffs to be posted on the settlement layer and instead just pass the validity proof as it’s generated. However, this only works when there is a single centralised sequencer with canonical ordering rights.

In a decentralised setting of multiple sequencers, transaction data availability becomes crucial to determine the correct inputs for the validity proof.

Thus, a new model has been proposed by Sovereign Labs that bypasses the settlement layer in order to achieve fast finality.

Real-time validity proofs are created — with recursive proofs generated for each subsequent block based on the rollup’s self-determined fork-choice rule.

The recursive proof can be passed to the destination domain in real time, rather than waiting for validity proofs to be posted into a settlement layer.

Finally, the recursive proof is batched and posted to the settlement layer in a cadence that makes economic sense for verification and “finality”.

Keep in mind — this approach works best when two domains share the same ZKVM or a shared prover scheme.

Implementing a verifier of one scheme in a different one won’t be as performant due to differences in the size of the field that’s being operated in.

zkSync Hyperbridges and Slush on Starkware are supercharged configurations of validity proof + shared settlement (what Vitalik calls state root bridges).

A transaction is executed in an L3/4/etc, and the state root is eventually settled on Era (the zkSync basechain) or StarkNet. The blockhash is read by the destination domain to prove that the transaction actually happened.

An issue with this approach is — again — latency, which is mitigated through a shared prover mechanism (the zkVM core spec) and through fast liquidity swaps like Connext that abstract away the finality risk, as we discussed before.

Another challenge is the high cost of on-chain verification. For Ethereum, the cost to verify a ZK proof is anywhere from 300K gas (typically Groth16 proofs) to upwards of 5M gas (STARK proofs).

While the exact costs vary, it’s universally acknowledged that the expenses can add up substantially — with costs quoted from $10M to $100M per annum depending on user transaction load and the price of gas tokens.

Rollups have the benefit of batching transactions into one periodic proof submission (as seldom as once per week) in order to reduce the per-transaction cost, but latency-sensitive use cases like bridging don’t have the same luxury — making it even more costly.
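A quick back-of-the-envelope calculation shows why cadence matters so much. The gas figure comes from the range above. The gas price (30 gwei), ETH price ($2,000), and 12-second cadence are assumptions picked purely for illustration, so plug in your own numbers.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_verification_cost_usd(
    gas_per_proof: int,
    gas_price_gwei: float,
    eth_price_usd: float,
    seconds_between_proofs: float,
) -> float:
    """Yearly cost of verifying one proof on-chain every `seconds_between_proofs`."""
    proofs_per_year = SECONDS_PER_YEAR / seconds_between_proofs
    cost_per_proof_eth = gas_per_proof * gas_price_gwei * 1e-9  # gwei -> ETH
    return proofs_per_year * cost_per_proof_eth * eth_price_usd


# A Groth16-sized proof (300K gas) verified every 12 seconds:
per_block = annual_verification_cost_usd(300_000, 30, 2_000, 12)
# The same proof verified once a week, rollup-style:
weekly = annual_verification_cost_usd(300_000, 30, 2_000, 7 * 24 * 3600)

print(f"every 12s: ${per_block:,.0f} per year")  # about $47.3M
print(f"weekly:    ${weekly:,.0f} per year")     # under $1,000
```

Under these assumptions each proof costs about $18, which is trivial once a week and enormous once per block.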

However, this issue of high on-chain verification costs can be mitigated to some extent by leveraging destination domains that offer more cost-effective block space (e.g., not Ethereum).

Simplifying the Madness

If you’ve made it this far, you’ve probably come to the same realisation as me: this is complicated as fuck.

Luckily not irreparably so.

We can streamline the cross-chain problem by using MMA: Multi-Message Aggregation.

MMA uses multiple bridges as a redundancy against malicious behavior and erroneous message passing.

For instance, Hashi by Gnosis aggregates bridges and has them pass the same message. If the same message appears on the destination domain by multiple providers, then it’s considered correct. If different messages appear, then it’s resolved through a dispute resolution process.
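The aggregation rule described above can be sketched in a few lines. To be clear, this is not Hashi's actual interface. The `aggregate` function, the bridge names, and the `threshold` parameter are hypothetical, and the dispute process itself is left out entirely.

```python
from typing import Dict, Optional, Tuple


def aggregate(reports: Dict[str, bytes], threshold: int) -> Tuple[str, Optional[bytes]]:
    """Combine per-bridge reports of the same message.

    reports:   bridge name -> message payload (or hash) that bridge delivered
    threshold: how many agreeing bridges are needed before acceptance
    """
    distinct = set(reports.values())
    if len(distinct) > 1:
        # Bridges disagree: hand off to dispute resolution, accept nothing.
        return ("dispute", None)
    if len(reports) >= threshold:
        return ("accepted", next(iter(distinct)))
    # Not enough bridges have reported yet.
    return ("pending", None)


print(aggregate({"bridge_a": b"0xabc", "bridge_b": b"0xabc"}, threshold=2))
print(aggregate({"bridge_a": b"0xabc", "bridge_b": b"0xdef"}, threshold=2))
```

Raising `threshold` trades latency and cost for redundancy, since every extra bridge is another set of assumptions that must fail before a bad message gets through.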

Another approach, proposed by Kydo and set to be implemented for Uniswap governance, conceptualises various bridge implementations as clients of an underlying interoperability protocol.

By using multiple clients and ensuring diversity of clients, interoperability becomes more resilient. This approach allows for the use of multiple bridge implementations while also providing an overall framework for interoperability.

To simplify things further, we can use a Hub-and-Spoke model, leveraging multi-hop bridging in order to reduce the complexity of N origin chains communicating with N-1 destinations.
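The saving is easy to quantify if we count light clients, assuming each chain needs one light client per counterparty it talks to directly. The two helper functions below are just that arithmetic, with names of my own choosing.

```python
def light_clients_full_mesh(n: int) -> int:
    """Every one of n chains tracks every other chain directly."""
    return n * (n - 1)


def light_clients_hub_and_spoke(n: int) -> int:
    """Each of n spokes tracks the hub, and the hub tracks each spoke."""
    return 2 * n


for n in (5, 10, 50):
    print(n, light_clients_full_mesh(n), light_clients_hub_and_spoke(n))
# 5 20 10
# 10 90 20
# 50 2450 100
```

The mesh grows quadratically while the hub grows linearly, at the cost of an extra hop and a dependency on the hub itself.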

Cosmos has long been a proponent of this approach — as seen through the design of the Cosmos Hub and new entrants like Dymension and Saga.

Polymer also wants to be the multi-chain routing hub, building a chain that will host ZK light clients and optimistic fraud provers in order to verify transactions from any modular configuration.

It’s All About Bridges

Through my research and time spent on bridges, I’ve come to realise that it’s really just bridges all the way down.

Think about it.

How are blockchains able to function in a modular paradigm? They need to coordinate across domains using bridges.

Settlement layers are also just bridges.

In a rollup-centric world, settlement layers are outsourced block verification and “finalisation” of a block. Rollups (via their canonical bridges) post proofs in order to be verified by another — hopefully more secure — validator set.

Wait, verifying proofs from another domain… that sounds like a bridge!

Viewed from another lens: multi-hop bridging hubs like Polymer are just settlement layers. They’re chains that verify through proofs the validity of messages being passed from other domains.

Shared sequencers are all the rage right now. Outside of decentralisation — their main selling point is coordinating cross-rollup transactions and capturing cross-domain MEV.

Coordination between rollups? That sounds like a bridge!

Speaking of rollups, they aren’t even real. You can have smart contract rollups with forced inclusion into a settlement layer, sovereign rollups with lazy execution, single node rollups with shared sequencing, etc.

Really makes us think on what even is the notion of a rollup — if it’s so variable. The only people who need to care are the ones interpreting and verifying the information created from rollups… which are bridges.

I guess the reason why I initially fell in love with the interoperability design space is that it really is all encompassing.

MEV is a really interesting topic, but becomes much more fascinating in a cross-chain context.

Scalability and modularity are tough problems in and of themselves, but their ramifications for cross-chain design make them all the more worthwhile to research and explore.

As the space continues to evolve at a rapid clip, I’m sure I’ll revisit this piece again… and again and again.

After all, bridges have always been my opportunity for reflection.

Bonus: Unrelated Musings

There are additional areas of exploration worth considering that I didn’t have the energy (or scope) to cover in this post.

Shared security

What are the implications of using shared security primitives?

Replicated security (e.g., Interchain Security from Cosmos Hub) allows for multiple chains to share a common security mechanism, reducing the need for each chain to bootstrap and maintain its own security measures. Are there ways to streamline cross-chain communication in this paradigm?

Mesh security is bi-directional security derived between two participating domains (e.g., Mars and Osmosis networks) — and can be scaled to N domains.

These chains can validate one another’s state using validity proofs or consensus proofs, as well as validate one another’s data availability. This approach could revolutionise cross-chain bridging, offering a more democratised, resistant way to verify transactions.

Borrowed security (via EigenLayer or Babylon Chain) allows for an augmentation of security — adding to the security of a chain’s existing validator set.

For EigenLayer restaking, an additional conditional light client would need to be configured in order to verify consensus proofs from EigenLayer restakers in addition to validators of an underlying chain. The same would be true for borrowed security from Babylon Chain’s checkpointing of Bitcoin.

User-activated soft fork

A relatively new concept related to the notion of sovereign rollups.

If one chain is having its cross-chain transactions censored by another chain, the origin chain can “soft fork” the destination chain in order to bypass the censorship.

This only requires one honest full node on the destination chain in order to perform the soft fork.

Acknowledgements

Thanks to conversations with Bo (Polymer), Yi (Axiom), Ismael (Lagrange), rain&coffee (Maven11), Cem (Sovereign), Omar (Matter Labs) that expanded my brain.

Thanks to Tina, Aditi, David, and pseudotheos for providing feedback on my post :)